Portfolio

Neural Network accelerator

When I joined the team, the project was a heavily optimized neural network compiler. Because those optimizations were tuned specifically for networks in the DarkNet framework, the compiler was not robust for new networks defined in ONNX, TensorFlow Lite, or PyTorch.

I managed the transition from a completely in-house framework to a compiler based on the TVM framework. This transition laid the foundation for a robust compiler and also led to an optimized hardware design. In the new design, the accelerator instructions are more complex, but a single convolution needs no more than 7 instructions. In the first design, the number of instructions depended on the size of the input tensors, which created a bottleneck on the memory bus.
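
To make the difference concrete, here is a toy model of the two instruction-count behaviours. Only the bound of 7 comes from the text above; the tile size, the instructions-per-tile figure, and the function names are hypothetical and do not describe the real instruction set.

```python
import math

# Upper bound for one convolution in the new design (from the text above).
NEW_DESIGN_MAX_INSTRUCTIONS = 7


def old_design_instructions(height, width, tile=16, instr_per_tile=4):
    """Hypothetical model: one small group of instructions per input tile.

    `tile` and `instr_per_tile` are made-up values for illustration only.
    """
    tiles = math.ceil(height / tile) * math.ceil(width / tile)
    return tiles * instr_per_tile


def new_design_instructions(height, width):
    """The count no longer depends on the tensor size."""
    return NEW_DESIGN_MAX_INSTRUCTIONS


for size in (32, 128, 512):
    print(size,
          old_design_instructions(size, size),
          new_design_instructions(size, size))
```

Under these assumptions the old count grows with the input area, so the instruction stream itself competes with tensor data for the memory bus, while the new count stays constant.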

The new design is also further optimized for the depthwise/pointwise convolutions present in most modern backbones (e.g. MobileNets).
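
For readers less familiar with these layers, the sketch below compares the multiply-accumulate (MAC) count of a standard convolution with that of a depthwise convolution followed by a pointwise (1x1) convolution. The layer dimensions are example values I picked for illustration, not measurements from our accelerator.

```python
def standard_conv_macs(h_out, w_out, c_in, c_out, k):
    """MACs for a standard k x k convolution."""
    return h_out * w_out * c_in * c_out * k * k


def depthwise_conv_macs(h_out, w_out, c_in, k):
    """MACs for a depthwise k x k convolution (one filter per input channel)."""
    return h_out * w_out * c_in * k * k


def pointwise_conv_macs(h_out, w_out, c_in, c_out):
    """MACs for a pointwise (1 x 1) convolution that mixes channels."""
    return h_out * w_out * c_in * c_out


# Example layer size, chosen for illustration only.
h, w, c_in, c_out, k = 56, 56, 64, 128, 3

standard = standard_conv_macs(h, w, c_in, c_out, k)
separable = (depthwise_conv_macs(h, w, c_in, k)
             + pointwise_conv_macs(h, w, c_in, c_out))

# The ratio equals 1/c_out + 1/k**2.
print(standard, separable, separable / standard)
```

The separable pair does far less arithmetic per byte moved, which is why these layers tend to stress memory bandwidth rather than compute and benefit from dedicated handling.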

During the summer, I was asked to do a feasibility study on ISP pipelines that process and improve raw Bayer images. This led to the implementation of the following networks on our accelerator:

Super-Resolution

A very simple network, which is basically a re-implementation of QuickSRNet.

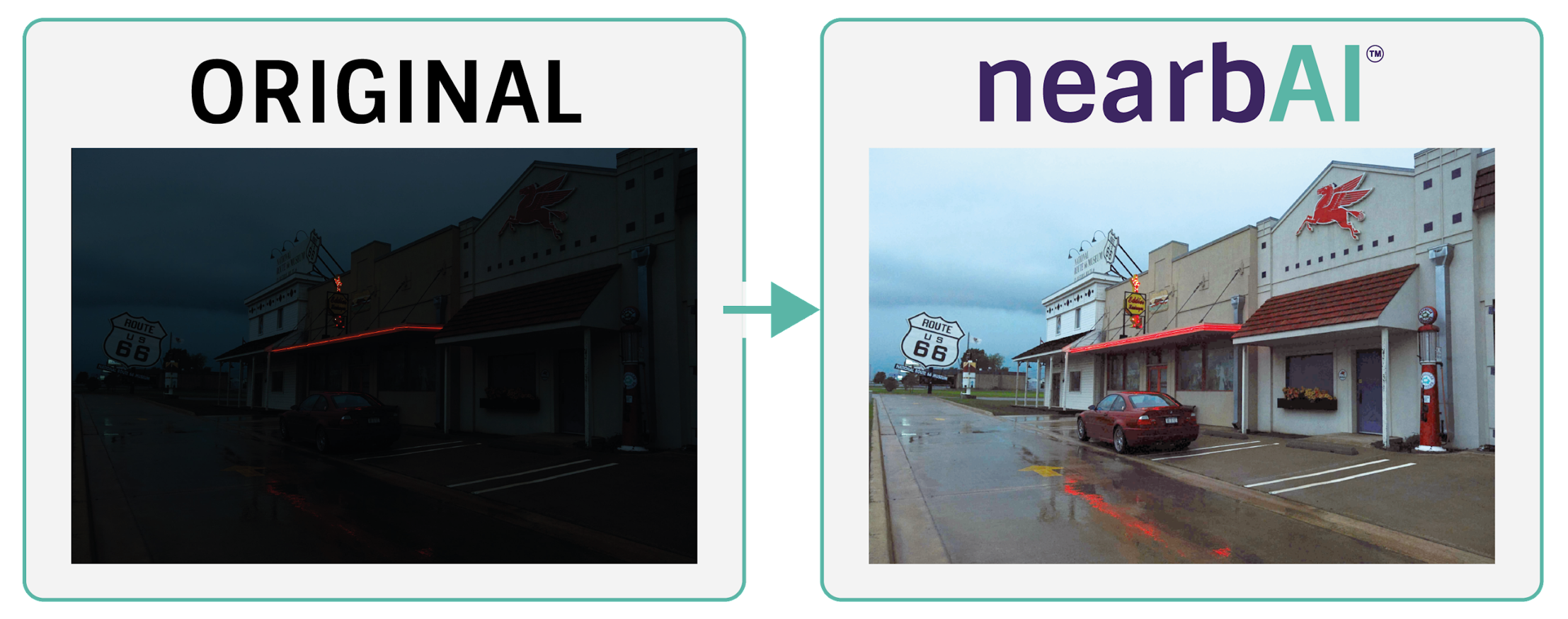
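
As far as I know, QuickSRNet-style networks are a plain stack of convolutions followed by a depth-to-space (pixel shuffle) step that turns channels into spatial resolution. The sketch below shows only that last step in plain Python on nested lists. The channel ordering follows the common pixel-shuffle convention and is an assumption, not necessarily what runs on our accelerator.

```python
def depth_to_space(x, r):
    """Rearrange a [C*r*r][H][W] tensor into [C][H*r][W*r].

    Assumed convention: out[c][y*r + i][x*r + j] = in[c*r*r + i*r + j][y][x].
    """
    c_in, h, w = len(x), len(x[0]), len(x[0][0])
    if c_in % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    c_out = c_in // (r * r)
    out = [[[0] * (w * r) for _ in range(h * r)] for _ in range(c_out)]
    for c in range(c_out):
        for i in range(r):
            for j in range(r):
                src = x[c * r * r + i * r + j]
                for row in range(h):
                    for col in range(w):
                        out[c][row * r + i][col * r + j] = src[row][col]
    return out


# Four 1x1 channels become a single 2x2 channel.
features = [[[1]], [[2]], [[3]], [[4]]]
print(depth_to_space(features, 2))
```

Because all convolutions run at the low input resolution and the upscaling is a pure data rearrangement, this kind of network is cheap to run on an accelerator.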

Night Vision

I am not entirely happy with the results of this IAT-based transformer network, but we needed a transformer network as an example for the accelerator. The network works well for dark images, but if only part of the image is underexposed, the bright parts become overexposed.
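
One way to picture this failure mode is the toy example below. It is not the IAT network: it simply applies one fixed brightening gain to every pixel, and both the gain value and the pixel values are made up for illustration.

```python
def brighten(pixels, gain=5.0):
    """Apply one global gain and clip to the valid range [0, 1].

    The gain of 5.0 is a hypothetical value that suits a uniformly dark image.
    """
    return [min(1.0, p * gain) for p in pixels]


def clipped_fraction(pixels):
    """Fraction of pixels that saturated at 1.0 (i.e. are overexposed)."""
    return sum(1 for p in pixels if p >= 1.0) / len(pixels)


dark_image = [0.05, 0.10, 0.08, 0.12]    # uniformly underexposed
mixed_image = [0.05, 0.10, 0.60, 0.80]   # half dark, half already bright

print(clipped_fraction(brighten(dark_image)))
print(clipped_fraction(brighten(mixed_image)))
```

An enhancement that is strong enough for the dark regions saturates the regions that were already well exposed, which matches what I see in the mixed-exposure results.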